A little over a week ago, I completed the first version of a script (in the form of a JavaScript bookmarklet) that allows you to download your Google Web History. Shortly afterwards, I posted some comments on a few other pages with similar scripts. Someone saw one of my comments and posted a comment on my article (posted as metal123, this person normally goes by the handle “Naka”).

Naka informed me of additional parameters for the Google Web History RSS feed of which I was unaware: yr, month, and day. I may have actually seen them earlier, but their significance and how I could use them did not dawn on me until I read Naka’s comment thoroughly. There was a major problem with the first revision of my script: it would only successfully obtain about 4k or so records of history.

No matter what I did with the parameters I was using (namely num and start), the script would only download a partial history. However, with some modifications to the code, I was able to take advantage of the date parameters, and update the script to download a Google History in its entirety.

Installation and Usage

The usage is the same, with the exception of some additional new features, which are fairly easy to use (you will need Flash for it to work). To use it, drag and drop this bookmarklet to your bookmarks bar:


Then visit https://www.google.com/history, log into your Google History, and click the bookmarklet. Accept any warnings that may come up about insecure content being loaded (it loads some JavaScript and a Flash movie from my server), and click the bookmarklet again to begin the download process. For more info about the security dialogs you may encounter, refer to the usage section of the original post.

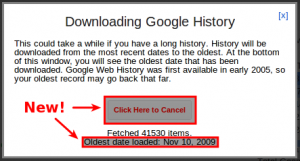

Please note that no private information of yours is ever transmitted/received to/from any server other than Google’s. Read the privacy info on my Google History Download script to learn more. The new download dialog box looks like this:

Be prepared to wait quite a while for your full history to download, if you want to get it all. Just to give you an idea of what to expect: I have over 40k searches and it downloaded over 135k records and the CSV was about 28MB in size. If you get sick of waiting, click the Cancel button and get what has been downloaded so far.

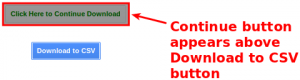

Notice that a cancel button has been added, as well as a note at the bottom of the window indicating the oldest record that has been downloaded. When you cancel the download, you can download a CSV with the records completed thus far. You’ll also be given the option to resume your download:

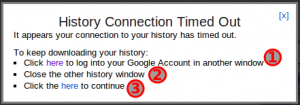

If Google ever prompts for a password on the RSS feed behind the scenes, a dialog will appear with instructions for logging back into your Google Web History and resuming the download:

You may see this dialog if you have your history up and are idle for a while. It does also appear sometimes when a lot of requests are made to the RSS feed (which the script does indeed do).

New features

I’ve included a number of improvements in the new version:

- Now obtains the entire Google Web History

- Given that it may take a long time to download one’s entire history, I’ve added a cancel button

- There is also a button to resume, if the download is cancelled

- History login timeout is more gracefully handled, resuming where it left off after the user logs back into history using another browser window

- As records are being downloaded, the oldest date loaded is displayed at the bottom of the window — this helps to know how much history has been gathered as it progresses

Google Web History RSS Parameters

Here’s the full list of RSS feed parameters of which I’m now aware, including additional ones Naka mentioned:

- num: Number of records to output (1000 is the most you can get at a time)

- start: The record number starting point (starting from 1) — Note, using num=1000 and incrementing the start parameter by 1000 will only get you so far (to about 4k records or so)

- month: 2-digit month

- day: 2-digit day of the month

- yr: 4-digit year

- max: Some kind of modified UNIX timestamp or something — Not particularly useful without fully understanding what it is, and it appears to have some of the same limitations as start

- st=web: Limit to web search

- st=img: Limit to image search

- st=frg: Limit to product (formerly known as Froogle) search

- st=ad: Limit to sponsored ad links

- st=vid: Limit to video search

- st=maps: Limit to map search

- st=blogs: Limit to blog search

- st=books: Limit to book search

- st=news: Limit to news search
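As a rough illustration of how the parameters above combine, here is a minimal sketch that builds the query string for one feed request. The function name and its options object are hypothetical and are not part of my script; only the parameter names (num, start, yr, month, day, st) come from the list above.

```javascript
// Hypothetical helper: builds the query string for a single feed request.
// Only the parameter names come from the list above; everything else is illustrative.
function buildHistoryQuery(options) {
  const params = new URLSearchParams();

  // The feed returns at most 1000 records per request, so cap num there.
  params.set("num", String(Math.min(options.num || 1000, 1000)));

  if (options.start !== undefined) {
    params.set("start", String(options.start));
  }

  if (options.date) {
    // yr is 4 digits; month and day are zero-padded to 2 digits.
    params.set("yr", String(options.date.getFullYear()));
    params.set("month", String(options.date.getMonth() + 1).padStart(2, "0"));
    params.set("day", String(options.date.getDate()).padStart(2, "0"));
  }

  if (options.searchType) {
    // For example "web", "img", "vid", "news".
    params.set("st", options.searchType);
  }

  return params.toString();
}

console.log(buildHistoryQuery({ date: new Date(2009, 5, 30), searchType: "web" }));
// prints "num=1000&yr=2009&month=06&day=30&st=web"
```

The resulting string would be appended to whatever feed URL you are requesting; the feed URL itself is deliberately left out here.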

Additional Technical Information

The new version of my script starts off by downloading the first 1000 records as it did before. However, from that point on, it loads the next 1000 records by setting the yr, month, and day parameters to the date of the 1000th entry. I did run into some odd problems with consecutive days with a lot of history.

In a few instances on days with a lot of history, the script would obtain the date of the 1000th record to load the next 1000. However, instead of starting on that day, it would start on the prior day. Then the original day would be the 1000th record again, and the script would end up in an infinite loop.

For example, when the starting date for the feed was set to July 1, 2009, the date of the 1000th record for that request was June 30, 2009. However, when the RSS feed parameters yr=2009&month=06&day=30 were used to load records starting from June 30, the RSS feed once again started with July 1. This resulted in an infinite loop, because the date of the 1000th record was June 30, 2009 and the feed parameters were being set to yr=2009&month=06&day=30 over and over again.

To avoid this infinite loop, I added a check against the prior date that was loaded: if it is the same as the date of the 1000th entry, the script decrements the date by a day. Unfortunately, this could potentially result in some records being lost from the download. However, this is a lot better than an infinite loop. Fortunately, this problem appears to occur rarely. The code also checks for repeat history entries (by checking the date/time), to avoid duplicates in the results.
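The two safeguards described above can be sketched roughly as follows. This is a simplified illustration and not the script's actual code: the function names are made up, and each record is assumed to carry a `date` property holding a JavaScript Date.

```javascript
// Sketch only: all names here are hypothetical.

// True when two dates fall on the same calendar day.
function sameDay(a, b) {
  return a.getFullYear() === b.getFullYear() &&
         a.getMonth() === b.getMonth() &&
         a.getDate() === b.getDate();
}

// Picks the date for the next request from the date of the 1000th record.
// If that is the same day we just requested, step back one day to break the
// loop (records from the skipped day may be lost, as noted above).
function nextRequestDate(previousRequestDate, lastRecordDate) {
  const next = new Date(
    lastRecordDate.getFullYear(),
    lastRecordDate.getMonth(),
    lastRecordDate.getDate()
  );
  if (previousRequestDate && sameDay(previousRequestDate, next)) {
    next.setDate(next.getDate() - 1);
  }
  return next;
}

// Appends only the records whose date/time has not been seen before.
function appendUnique(allRecords, seenTimestamps, batch) {
  for (const record of batch) {
    const key = record.date.getTime();
    if (!seenTimestamps.has(key)) {
      seenTimestamps.add(key);
      allRecords.push(record);
    }
  }
  return allRecords;
}
```

In the June 30, 2009 example, the previous request date and the date of the 1000th record are both June 30, so `nextRequestDate` would return June 29 instead of requesting June 30 again.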

http://www.onlineconversion.com/unix_time.htm

Take the first 10 digits of the max parameter and put them in there; this gives the conversion. Google’s older/newer and calendar buttons use this. Not useful indeed.
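The same conversion can be done in JavaScript without the website. This is a small sketch that assumes, as the comment above suggests, that the first 10 digits of max are a UNIX timestamp in seconds; the function name and the sample value are made up for illustration.

```javascript
// Assumption: the first 10 digits of `max` are a UNIX timestamp in seconds.
// The function name is hypothetical.
function maxParamToDate(max) {
  const seconds = Number(String(max).slice(0, 10));
  return new Date(seconds * 1000); // Date expects milliseconds
}

// Made-up sample value, not taken from a real feed.
console.log(maxParamToDate("1330560000123456").toISOString());
// prints "2012-03-01T00:00:00.000Z"
```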

I was in the process of trying to make it export XML… (thinking I won’t bother, got all my history the hard way)

I noticed the script doesn’t save the guid, query_guid, video_length, img_thumbnail. Thumbnails are nice if you’re making it display in a search engine like I’ve done and query_guid and guid connect each other so you know which links you clicked on for each google search. Video_length might not be useful but why not right.

Thank you, worked like a charm. https://twitter.com/#!/eklipsi/status/172333391180595200

Thanks! have a TON of new data to play with!

Just wanted to add some useful input to this.

Theoretically if someone still wanted immediate access to their web history they could use either greplin or cloudmagic and import the csv into evernote somehow or somehow even mail a large email to themselves using yahoo.

I’m pretty sure both services use backend services such as Lucene and Hadoop, which make searching incredibly fast.

However actually loading the file locally (for some who have large web histories) i would imagine would be incredibly painstaking.

If someone has an alternative to Excel that has a tiny footprint, it would be incredibly helpful.

Exporting to CSV was the easiest option, because it didn’t take much to combine all of the records into a flat file, using the Downloadify JavaScript library. If there is a JavaScript library for creating Excel files, it could be utilized in my script to output to Excel. I have converted data into Excel before, but only using PHP on the server-side.

I have my doubts as to whether or not there is a library to create Excel files within the browser. Unfortunately, unwieldy as it is, I think that creating a CSV in the browser window may be the best option as far as keeping it all within the browser.

Thanks for sharing your thoughts.

Thanks for the reply,

What are your thoughts on Google Voice’s spam inbox not being exported with Takeout data?

Would there be a non exorbitant way of exporting that data as well ? …As well as a non exorbitant way of deleting entire call logs in any folder that would require it ?

Then I would also like to look at the ethics of all of this.

I see very serious EXTREME ethical concerns on both sides of the coin.

Perhaps the main question should be,… and probably no one has really gone into depth with…..

Just what IS google’s intentions with the privacy policy changes ?

COULD this truly be meant as a convenience to the user, or is there some fine print that now will give them more control of my information?

If the latter, then I can see beyond not just GV but also Android and GD users who will have very few options of which avenues to take in the future.

Again, thanks for the quick reply!

XIPRELAY

I haven’t tried takeout for exporting data. Perhaps their thought is no one would be interested in exporting “spam”. However, in the case of phone numbers, it would be useful if you ever want to create a list of callers to avoid.

Extracting the data probably could be automated by scraping the Voice website itself, but it would take a lot of work and it could break if ever they change the look/feel of the site. There’s a lot of chatter here:

http://www.reddit.com/r/technology/comments/q107c/remember_to_go_to_googlecomhistory_and_remove_all/

That the real reason to consolidate the privacy policies is to allow uniform access to data across all their services. I wouldn’t be surprised if that was the case, because collectively they have a lot of very valuable user data.

While i have the floor here as well.

Has anyone else noticed that google takeout doesn’t export anything in the googlevoice spam section.

A tool to do just that would also be incredibly helpful!

EDIT:

In a pinch i suppose you could simply move everything from the spam folders to your inbox if you are desperate, I wonder if you could simply do this then hit the undo button once googletakeout is finished.

No, I’m doing nothing with it. You will need a Flash-enabled browser for it to work. If you’re using iOS (iPad, iPhone, or iPod Touch) it will not work. If you have Flash disabled on your browser, you’ll need to enable it for it to work. A small Flash file is what makes it possible to convert the data to CSV within your browser, without having to transmit it to a third-party server.

Very odd… Do you have Flash installed on your computer? You need Flash, because there is a small Flash file that helps make it possible to convert the downloaded data into a CSV file.

I have the same problem as the above guy. Where does the downloaded data go to? Can I just save it somehow in the cache and leave the converting into a CSV file for later? I REALLY need to just get the data today.

The CSV data is stored in a JavaScript variable called output. You could output it all to a new window. Here’s the URL for a bookmarklet you can create that will do it for you:

javascript:void(function(){newwindow=window.open("","Output");newwindow.document.write("<title>Output</title><body><pre>"+output+"</pre></body></html>");newwindow.document.close();})();

Create a new bookmark and use that code for the URL. It should open a new window with the CSV output. You would then have to copy/paste that into a new text file and save it as a .CSV.

I tried… but it just opens a new tab…

Let me see if I understand this. I go to my history, I open your original bookmarklet, then once the data is fetched I open the bookmarklet you gave in your comment?

When I click the bookmarklet linked to the code that you gave me, it always just opens a new tab…

I know you can do some js magic. help me! thanks!

There must be something odd in the search history that is tripping up my code and preventing it from ever getting to the CSV generation code, which creates the output variable. That’s why you’re getting a blank page, because I haven’t yet passed the data to the CSV creation function, which is why you’re never seeing the button.

Unfortunately, it’s very difficult for me to debug without being able to reproduce the problem. :-/ metal123 is working on a modification of the script to include some additional data, but it is crashing on him. Perhaps I might see the issue in reviewing his code and fixing his problem, but I cannot promise anything.

I’m not sure, it could have something to do with the way the flash block is implemented. Try disabling all your flash block extensions, enable flash, clear your cache, and try again. It could be that with the flash block enabled, something was cached that is messing things up.

javascript.void(0) is what appears on the status bar when I hover over the “click here to cancel” button.

1. The fetching eventually gets stuck around a certain date

2. When I click “click here to cancel” nothing happens at all

3. How the hell do I get the CVS file?

Thanks. DUDE TODAY IS THE LAST DAY HELP ME

I’m not sure why it’s not working. If you have Flash installed and enabled, when you click cancel, you should see the screen with some summary stats and the Download to CSV button down below. I’ve gotten it to work in Firefox and Google Chrome without a problem.

You’ve done great work with this, and I’ve already passed on your link to quite a few people. Thanks for putting this code out into the world!

Question: it seems that what is sacrificed by deleting the entire google search history is the ease of searching, viewing, and using the data ourselves. I’m not an Excel wizard or a data-visualization wizard, but i was wondering if someone who is could explain the best way to download the data and then reconstruct it to be viewable in as convenient and searchable a form as on the google page itself. Ideally I could continue to use this data easily even after i delete it.

On the other hand: Let’s say google just retains the data anyway after we delete it (and I have a more than nagging suspicion that they do, because data mining is the core value of their entire business model) – wouldn’t the only effect of deleting the search history be that we no longer have access to it in such a “pretty” form, but they still do? If my theory is true then there’s no real benefit to deleting the history, other than the idea that google might share the data more easily among google’s own services, and is that such a bad thing?

If it was possible to download all the data that can be seen on the google web history page, and then display and use it in the same way, I’d be more likely to delete it, especially if they’re likely to just keep it anyway.

Anyone else agree?

You’re right, unfortunately CSV isn’t the most convenient form for visualization or searching. You could try importing the file into Google Fusion tables, which would provide better accessibility.

Thanks for the tip. If I opt not to put my web history back into another google service, I can see a lot of value in using Google Fusion tables for less sensitive data I’m working with. Today’s the last day and I still haven’t decided whether it’s worth it to remove the history.

Thank you very much! Works like a charm!

I believe there are tools for exporting Gmail. You could also log into your Gmail using IMAP and download your history that way. All of your chat history should reside within one of the folders.

Pingback: numeroteca » Downloading and erasing you google search history #unGoogle

are they also going to “share” our google chat history?

In case you haven’t seen my comment, you should seriously consider adding guid and query_guid so you know which link was clicked from which google search, the script is flawed without this. video_length and img_thumbnail are optional.

I tried editing the javascript myself but running the edited version causes the Download CSV button to disappear. This may mean the other users who can’t get the CSV button to appear may be experiencing an error with the script or perhaps their Google History is causing the script to die.

For those of you who are truly desperate to archive and delete your history before the policy change, TRY A DIFFERENT COMPUTER.. Or as a last resort download http://nakas.info/bashhistory.rar and run “bash.exe scipt.sh” from command prompt (find wget and bash EXES in google to be safe, replace the ones I have). Keep editing the script and running it until you get proper output with no small or 0KB files. Email me at [email protected] and I’ll give you PHP to convert the output to MYSQL and view it in a PHP search engine when it’s ready.. Obviously you would have to be computer competent, you wouldn’t want me to be malicious and steal your history from under your nose.

Yeah, there must be a bug somewhere that I’m not seeing when I extract the data from my account. I wonder if there’s something in my regular expressions that’s getting tripped up by characters within certain records or something.

Can you tell where it’s crashing on you? Can you post a URL to your modified JS file to obtain guid and query_guid? I can take a look and see if I can figure out where things are going wrong.

guid and query_guid look like this in the XML

EfafafagagagauajwdhwaCA

4DAwadjawdawd8waDaw

The tags are different which is why it’s not getting picked up by my attempted quick fix

https://nakas.info/GoogleHistory/google-history.js

Might as well not even look at this script though, all I did was add GUID/query_guid, it won’t work at all until (I assume) the tag stripper recognizes the tags.

No, your script was pretty solid for the most part. The only problem was where it was trying to run the .replace() method for undefined properties in the object containing the feed data.

I’ve made some updates by making it a bit easier/more robust to add new parameters. Now, I extract the headers for each data item, and assign the properties for each datum according to the extracted headers. If a header isn’t found, it simply assigns an empty string to that property.
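The idea behind that change looks roughly like the sketch below. It is a simplified illustration, not the v2.1 code itself: the function names are made up, and the header list is only an example (title, link, and pubDate are assumed here; guid, query_guid, video_length, and img_thumbnail are the fields mentioned in the comments above).

```javascript
// Example header list; the real script extracts its headers from the feed data.
const HEADERS = ["title", "link", "pubDate", "guid", "query_guid", "video_length", "img_thumbnail"];

// Copies every expected header onto a new record, substituting an empty
// string when the feed item does not have that property.
function normalizeRecord(raw, headers) {
  const record = {};
  for (const header of headers) {
    const value = raw[header];
    record[header] = value === undefined || value === null ? "" : String(value);
  }
  return record;
}

// Builds one CSV row. Because every property is now a string, calling
// .replace() can no longer fail on a missing field.
function toCsvRow(record, headers) {
  return headers
    .map((header) => '"' + record[header].replace(/"/g, '""') + '"')
    .join(",");
}

const item = normalizeRecord({ title: 'a "quoted" search', link: "http://example.com/" }, HEADERS);
console.log(toCsvRow(item, HEADERS));
```

With this in place, a record that lacks video_length simply produces an empty column instead of stopping the export.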

Try replacing the JavaScript URL string in my bookmarklet to /tools/google-history/google-history-v2.1.js to test it out.

I’m not sure if the changes will fix the problems Erjoalgo has been experiencing, but it is possible they may. After I get confirmation it’s working for you, I’ll update the main script to v2.1. Thanks for your help with this!

D’oh, I just read that you deleted your history yesterday, so I guess you’ll be unable to test. Perhaps v2.1 will work for Erjoalgo now though.

Nope /=

If I do “click here to cancel” before a certain fixed month not too long ago, I do get the prompt to download the CSV. After that month I do not. Unfortunately, by the time I have time to debug this, March first will have passed and horrible things will have happened to me.

It must be something odd with the data beyond that month that is breaking the script. Does it appear to go all the way back to 2005, but just break when it’s creating the download button? If it does go all the way back to 2005, does it output the stats info at least? This may help me to figure out more or less where it’s breaking. If it gets to a certain section of stats info where it dies, let me know where that’s happening.

it doesn’t go all the way to 2005, stops around 2006. Again, the stats only show up if I hit stop before October 2011 or so, which isn’t very useful.

Anyway, I already deleted my data. I wrote up some Java code to figure out the days which were missing, and kept feeding that into a bash script similar to metal123’s. Had I done that from the beginning, I wouldn’t have wasted so much time!

Now I just need to write some code to eliminate redundancy and compress out the xml tags, which I have no hurry to do.

If you still want to figure out why your code fails on some data, I’ll figure that out… eventually

Thanks!

Yeah, let me know if any oddities in the data stick out at you. Perhaps some special characters, empty records, or something is tripping up my code.

It looks like it’s working, but why does the command line keep spitting 401 unauthorized? By the way, I’m not computer-retarded, just don’t have time to learn about the details of these RSS parameters and sheet.

Also why did you skip half the days of the month? Thanks for your script. At least it works, unlike geeklad’s fancy js sheet!

His JS works fine, I would suggest trying a different computer, if you want to help you could let him know with maybe a javascript console where it’s getting an error.

401 unauthorized is when you have the wrong username/password in there. If that’s not the case (and only SOME output is 401) then Google is blocking you for downloading too much (I’m assuming). You’re just going to have to keep editing the script to go over the parts you missed so you can get all the history. It skips half the days because it downloads two days at a time.

The XML->MYSQL gets rid of the huge amount of duplicates in the output.

Yeah, I’m sure he put good effort into his JS, but its failing for some reason, and it doesn’t even let me get a hold of the partial output because I must wait for his user friendly CVS data converting function to show up.

Yeah, I’m getting SOME output with 401. “Google is blocking you for downloading too much”? I mean either the password is correct or not? What do you mean keep editing the script? What parts of the script could I edit? Right now I don’t care about any amount of duplicates, but I do care about not missing anything. You’re sure it downloads 2 days at a time?

Yes, it downloaded around the same amount of records as geeklad’s script (a little more actually due to picking up different categories I assume, but hey, I can’t be arsed looking for why)

Change the “for YEAR in ….” and “for MONTH in… ” and “for DAY in…” so that the script ONLY downloads the days that came out as 401s in the files (the filenames have a datestamp in the format $YEAR$MONTH$DAY). For example if you’re getting 401s on December of 2011, you would change the script to “for YEAR in 2011” “for MONTH in 12” “for DAY in (all days)”. Keep at it and you will have all your history, check the files carefully to confirm! Mine all came out, I’m really happy about it.

It doesn’t technically download multiple days at a time, but as you can tell by the parameter “&num=1000” it downloads 1000 records for every second day of every month of every year. If you googled more than 1000 results in 2 days then it won’t pick them all up (UNLIKELY)

why so much 401 fuuuuuuuuuuuuuuuuuu

You’re almost there… The CSV export method is dying where the script attempts to call the .replace() method on the .video_length attribute when it is undefined. You’ll need to add some checks for when any of the attributes are undefined. The easiest thing would be to assign an empty string (“”) so that the .replace() method will not fail.

Haha, good luck, I deleted my history yesterday night. You should strip out the video_length and release it at least, someone tech savvy would want and be able to use the guid.

Every time it reaches a certain number of entries, it freezes. I tried this on a mac and windows computer. Is this a memory issue?

It’s more likely caused by a bug in the script… There have been some people that have encountered issues with it, but unfortunately I haven’t been able to reproduce the problem with my history. I was able to download over 130K records without issue.

Damn… I wish another JavaScript developer would run into the same issues w/ their own history so they could help me debug. The one disadvantage of the fact that this script does everything within the browser, is I cannot debug it when it encounters histories that break the script. 🙁

Pingback: How do I download and save my Google browsing and search history to my personal computer? - ITTV.co.il

I’m on https://history.google.com/history/ in Chrome on my Mac and I click the bookmarklet and … absolutely nothing happens. No warnings. Nothing.

Any tips? VERY excited to get this working!

Hey Geeklad,

When I try to get on https://www.google.com/history/, Google automatically redirects to https://history.google.com/history/ and your script warns:

“Please visit https://www.google.com/history/ and log into your Google Account. Then click the bookmarklet again.”

I’m a little illiterate when it comes to web programming. Can you release an update to fix this?

Thanks!

Pingback: update bookmark blog 02/10/2013 (p.m.) | Kevin Mullet's Bookmarks

Hi, I am having a similar problem as David Boyle: when I pull up Google Web History and then click the bookmarklet, absolutely nothing happens. If I click it on any other web site it tells me I’m in the wrong place, but it doesn’t seem to be doing anything for me… :/

I am on Windows 7 SP1 x64 running Chrome Version 24.0.1312.57 m

Hi, I am having the same problem as David and Denver. The problem is that the URL has changed from google.com/history to history.google.com/history and the script does not parse the updated URL properly anymore. Could you please change the script to recognize the new URL? This should require just a quick fix in the regex. Thanks!

You have to update the script.

Change “https://www.google.com/history” to “https://history.google.com/history/”
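For anyone patching a copy of the script themselves, a check that accepts both the old and the new address could look something like this. It is only a sketch of the kind of fix the commenters describe: the pattern and function name are hypothetical, and the script's real URL check is not shown in this post.

```javascript
// Hypothetical replacement for the script's URL check: accepts both the old
// www.google.com/history address and the newer history.google.com/history one.
const HISTORY_URL = /^https:\/\/(www\.google\.com|history\.google\.com)\/history(\/|\?|#|$)/;

function isHistoryPage(url) {
  return HISTORY_URL.test(url);
}

console.log(isHistoryPage("https://www.google.com/history"));      // prints true
console.log(isHistoryPage("https://history.google.com/history/")); // prints true
console.log(isHistoryPage("https://example.com/history/"));        // prints false
```

Inside a bookmarklet, the value to test would be `window.location.href`.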

Please…

Unfortunately, like David Alexandre, I am late to the party; please can you update your script to allow for its continued use?

On using this with Firefox:

1. the security exception can’t be done without extra work. It pops up, and allows you to make an exception, but it won’t apply it for the next time you try the bookmarklet. You need an addon to do this: https://addons.mozilla.org/en-us/firefox/addon/toggle-mixed-active-content/

2. the domain still needs to be changed. AFAIK, it has not yet been updated to resolve this.

Geeklad, please update the script! Please… I am desperate.

Is GeekLad still alive?

Please update the script. I am considering divorcing Google, but I want to keep all my data first.

Gives an error message always:

Instructions

Please visit https://www.google.com/history/ and log into your Google Account. Then click the bookmarklet again.

I use Chrome with Windows 8.

Do you have any solution?

Hello! I’ve been following your blog for some time now and finally got the courage to go ahead and give you a shout out from Houston Texas! Just wanted to mention keep up the excellent work!

Sadly, this no longer seems to work.